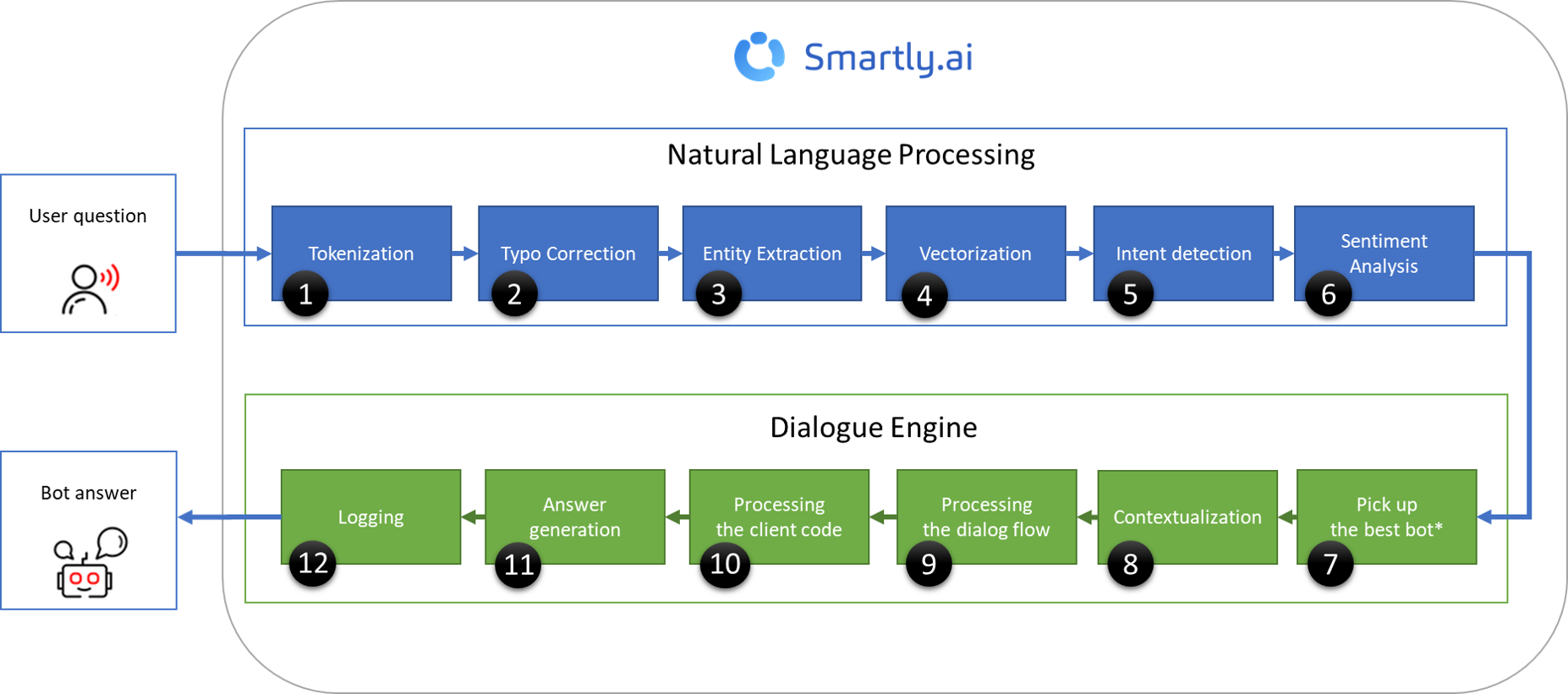

Overview

Below is a description of our AI pipeline.

1/ Tokenization

Tokenization is the step where we split an input string into atomic units called tokens, usually words.

One of the simplest forms of tokenization is splitting on spaces:

“This is simple.” -> [“This”, “is”, “simple.”].

This task can be much harder in languages that do not use spaces between words, such as Japanese:

これは簡単です (“This is simple.”)
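As a rough illustration (plain Python `str.split()`, not the platform's own tokenizer), space-based tokenization handles the English example but returns the Japanese sentence as a single token:

```python
def whitespace_tokenize(text: str) -> list[str]:
    """Split on runs of whitespace; punctuation stays attached to words."""
    return text.split()


print(whitespace_tokenize("This is simple."))  # ['This', 'is', 'simple.']

# Japanese writes words without spaces, so the whole sentence
# comes back as one token.
print(whitespace_tokenize("これは簡単です"))  # ['これは簡単です']
```

Splitting Japanese correctly typically requires a dictionary-based morphological analyzer (MeCab is a well-known example).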

2/ Typo Correction

Typo correction is the process of detecting incorrectly spelled words in a text and suggesting corrections. Our approach handles this proactively: we add simulated typos to the training dataset to teach bots the common, predictable errors that your users are likely to make.
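A minimal sketch of this kind of data augmentation, assuming three hypothetical edit types (a deleted character, two swapped neighbours, a doubled key); the exact typo model used by Smartly.AI is not described here:

```python
import random


def add_typo(word: str, rng: random.Random) -> str:
    """Apply one random character-level edit that mimics a typing slip."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word) - 1)
    op = rng.choice(["delete", "swap", "double"])
    if op == "delete":  # a missed key, e.g. "flight" -> "fight"
        return word[:i] + word[i + 1:]
    if op == "swap":  # two keys hit in the wrong order, e.g. "flight" -> "lfight"
        return word[:i] + word[i + 1] + word[i] + word[i + 2:]
    # "double": a key pressed twice, e.g. "flight" -> "fflight"
    return word[:i] + word[i] + word[i:]


def augment(sentences: list[str], copies: int = 2, seed: int = 0) -> list[str]:
    """Return the original sentences followed by noisy copies, each with at most one typo."""
    rng = random.Random(seed)  # seeded so the augmented dataset is reproducible
    out = list(sentences)
    for sentence in sentences:
        words = sentence.split()
        if not words:
            continue
        for _ in range(copies):
            noisy = words.copy()
            j = rng.randrange(len(noisy))
            noisy[j] = add_typo(noisy[j], rng)
            out.append(" ".join(noisy))
    return out


print(augment(["book a flight to paris"], copies=3))
```

The noisy copies are labelled with the same intent as their original sentence, so the bot learns that "fligth" and "flight" mean the same thing.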

Note: Typo correction is not necessary if you are using a modern vectorizer such as the "CBOW + Bigram" vectorizer.

3/ Entity Extraction

Entity extraction uses two different approaches:

a) Custom entity extraction: Extracts relevant information from a text. The bot learns this information from the list of elements and their synonyms that you provide.

b) System entity extraction: Extracts times, numbers, etc. The full list is available here. These entities are provided and trained by Smartly.AI for all users of the platform.
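The two approaches can be sketched in a few lines of Python. The `CITY_ENTITY` dictionary and the number regex below are illustrative assumptions, not the platform's data; real system entities such as dates and times need far more than a regex.

```python
import re

# Hypothetical custom entity: each canonical value maps to its synonyms.
CITY_ENTITY = {
    "Paris": ["paris", "city of light"],
    "New York": ["new york", "nyc", "big apple"],
}

# Toy "system entity": integers and decimals.
NUMBER_RE = re.compile(r"\b\d+(?:\.\d+)?\b")


def extract_custom(text: str, entity: dict[str, list[str]]) -> list[str]:
    """Return canonical values whose synonyms appear as whole words in the text."""
    lowered = text.lower()
    found = []
    for value, synonyms in entity.items():
        for synonym in synonyms:
            if re.search(r"\b" + re.escape(synonym) + r"\b", lowered):
                found.append(value)
                break  # one match per canonical value is enough
    return found


def extract_numbers(text: str) -> list[float]:
    """Pull out every integer or decimal number in the text."""
    return [float(m) for m in NUMBER_RE.findall(text)]


print(extract_custom("Two tickets to NYC please", CITY_ENTITY))  # ['New York']
print(extract_numbers("I need 2 rooms for 3.5 nights"))  # [2.0, 3.5]
```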

4/ Vectorization

Because Natural Language Processing relies heavily on mathematics, we use vectorization to transform the user request into a mathematical object: a vector.

For example, if our dictionary contains the words {Smartly.AI, is, the, best, great}, and we want to vectorize the text “Smartly.AI is great”, we would get the vector (1, 1, 0, 0, 1): each position is 1 if the corresponding dictionary word appears in the text and 0 otherwise.
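The example above is a binary bag-of-words vector. A minimal Python sketch follows; note that the dictionary needs a fixed order (a list rather than a set) so that each position in the vector maps to exactly one word:

```python
def binary_bow(text: str, vocabulary: list[str]) -> tuple[int, ...]:
    """1 if the vocabulary word occurs in the text, else 0, in vocabulary order."""
    tokens = set(text.split())
    return tuple(1 if word in tokens else 0 for word in vocabulary)


vocabulary = ["Smartly.AI", "is", "the", "best", "great"]
print(binary_bow("Smartly.AI is great", vocabulary))  # (1, 1, 0, 0, 1)
```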

In practice it is a bit more complex, but you get the idea 😉

We currently offer two vectorizers:

- TF-IDF: A very basic but efficient algorithm. Its main limitation is that it treats words as isolated symbols with no linguistic context: a word either matches a dictionary entry exactly and gets a weight, or it contributes nothing to the vector. This all-or-nothing behaviour lacks flexibility.

- FastText: This vectorizer offers the advantages of its grandfather TF-IDF plus some additional flexibility:

    - Typo robustness: To a certain extent, this vectorizer is more tolerant of typos, because it looks at groups of characters instead of full words AND at what comes before and after each group of characters.

    - Synonym handling: Because this vectorizer uses embeddings to vectorize inputs, it can accept words that were not necessarily present in the training dataset.
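To make the difference concrete, here is a from-scratch sketch, not the platform's implementation. `tfidf_vectors` uses the textbook weighting tf × log(N / df), and `char_ngrams` builds fastText-style subwords (the word wrapped in `<` and `>`, with n-grams of length 3 to 5 here). The Jaccard overlap is only a stand-in: fastText actually represents a word by summing learned n-gram embeddings. It simply shows that a misspelled word still shares subwords with the correct one, whereas TF-IDF sees two unrelated tokens.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[str]) -> tuple[list[str], list[list[float]]]:
    """Textbook TF-IDF: (count / doc length) * log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in docs]
    vocabulary = sorted({t for tokens in tokenized for t in tokens})
    df = Counter(t for tokens in tokenized for t in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        length = len(tokens) or 1  # avoid dividing by zero on empty docs
        vectors.append(
            [tf[t] / length * math.log(len(docs) / df[t]) for t in vocabulary]
        )
    return vocabulary, vectors


def char_ngrams(word: str, n_min: int = 3, n_max: int = 5) -> set[str]:
    """fastText-style subwords: n-grams of the word wrapped in '<' and '>'."""
    padded = f"<{word}>"
    return {
        padded[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(padded) - n + 1)
    }


def ngram_overlap(a: str, b: str) -> float:
    """Jaccard similarity of two words' subword sets (0.0 = nothing shared)."""
    ga, gb = char_ngrams(a), char_ngrams(b)
    union = ga | gb
    return len(ga & gb) / len(union) if union else 0.0


vocab, vecs = tfidf_vectors(["book a flight", "book a hotel"])
print(vocab)  # ['a', 'book', 'flight', 'hotel']
# 'a' and 'book' appear in every document, so their IDF (and weight) is 0.
print(ngram_overlap("flight", "fligth"))  # 0.25: the typo still shares subwords
print(ngram_overlap("flight", "hotel"))   # 0.0
```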

5/ Intent Detection

One of the key components of the platform!

Basically, it compares the input vector to a set of pre-trained vectors (intents) to find the one that is the most similar. The result of this operation is the best intent candidate and a confidence score.
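A minimal sketch of this comparison, assuming cosine similarity as the similarity measure and using the similarity of the winning intent directly as the confidence score. The intent names and vectors are hypothetical, and the platform's actual scoring is not described here.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 if orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))
    return dot / norms if norms else 0.0


def detect_intent(
    query: list[float], intents: dict[str, list[float]]
) -> tuple[str, float]:
    """Return the most similar intent and its similarity as a confidence score."""
    scores = {name: cosine(query, vec) for name, vec in intents.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical intent vectors over the vocabulary [book, flight, hotel, weather]
intents = {
    "book_flight": [1, 1, 0, 0],
    "book_hotel": [1, 0, 1, 0],
    "get_weather": [0, 0, 0, 1],
}
best, confidence = detect_intent([0, 1, 0, 0], intents)  # the query "flight"
print(best, round(confidence, 3))  # book_flight 0.707
```

A low confidence score is a useful signal: below some threshold, a bot would typically fall back to a "sorry, I did not understand" answer rather than trust the best candidate.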

6/ Sentiment Analysis

Extracts sentiment polarity (positive or negative) from user input.
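For intuition only, here is a toy lexicon-based polarity scorer. It is a deliberately simple, swapped-in technique with a hypothetical word list, not the model Smartly.AI uses, and it returns "neutral" when positive and negative words cancel out.

```python
import string

# Hypothetical sentiment lexicon; real systems use much larger lists or trained models.
POSITIVE = {"good", "great", "love", "thanks", "perfect"}
NEGATIVE = {"bad", "terrible", "hate", "useless", "wrong"}


def polarity(text: str) -> str:
    """Count positive minus negative words and map the sign of the result to a label."""
    tokens = [t.strip(string.punctuation).lower() for t in text.split()]
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("Great, thanks!"))         # positive
print(polarity("This answer is wrong."))  # negative
```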

For more on this, please visit the Sentiment Analysis section.

7/ Pick the best bot (Master Bot)

If you are using a Master Bot, the dialogue engine decides which bot the user request should be delegated to, and when to switch to another one.
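The routing decision could look something like the sketch below. Everything in it is an assumption for illustration: child bots are modelled as functions returning a confidence between 0 and 1, and the engine only switches away from the active bot when another bot scores higher by more than a hypothetical `switch_margin`.

```python
from typing import Callable, Optional

# A child bot is modelled as a function returning its confidence for a request.
Scorer = Callable[[str], float]


class MasterBot:
    def __init__(self, bots: dict[str, Scorer], switch_margin: float = 0.2):
        self.bots = bots
        self.switch_margin = switch_margin
        self.active: Optional[str] = None  # bot currently handling the conversation

    def route(self, request: str) -> str:
        """Return the name of the bot the request is delegated to."""
        scores = {name: score(request) for name, score in self.bots.items()}
        best = max(scores, key=scores.get)
        # Switch only if no bot is active yet or another bot is clearly better;
        # this keeps follow-up messages with the bot that owns the conversation.
        if self.active is None or scores[best] - scores[self.active] > self.switch_margin:
            self.active = best
        return self.active


master = MasterBot({
    "travel": lambda r: 0.9 if "flight" in r else 0.1,
    "weather": lambda r: 0.9 if "weather" in r else 0.1,
})
print(master.route("book a flight"))       # travel
print(master.route("a window seat"))       # travel (no clear winner, stay)
print(master.route("what's the weather"))  # weather
```

The margin trades responsiveness for stability: a larger value makes the engine stick with the current bot through ambiguous follow-ups.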

8/ Contextualization

When we receive a user request, we retrieve its full context before processing it.

Some elements of this context are:

- last active state,

- last user request / bot answer,

- short-term memory,

- long-term memory,

- user data (for example, if the user is coming from Messenger).
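One way to picture this context is as a single record loaded per user before processing starts. The field names mirror the list above, while the in-memory `_STORE` and `load_context` are hypothetical stand-ins for the platform's storage:

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ConversationContext:
    last_active_state: Optional[str] = None
    last_user_request: Optional[str] = None
    last_bot_answer: Optional[str] = None
    short_term_memory: dict[str, Any] = field(default_factory=dict)
    long_term_memory: dict[str, Any] = field(default_factory=dict)
    user_data: dict[str, Any] = field(default_factory=dict)  # e.g. a Messenger profile


# Hypothetical in-memory store keyed by user id; a real deployment would use a database.
_STORE: dict[str, ConversationContext] = {}


def load_context(user_id: str) -> ConversationContext:
    """Retrieve the user's context, creating an empty one on first contact."""
    return _STORE.setdefault(user_id, ConversationContext())
```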

9/ Dialog flow processing

Once we understand what the user wants and have gathered the relevant context, the dialog engine combines all of this and navigates the dialog flow to find the next best dialog state to activate.
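A heavily simplified sketch, assuming the dialog flow can be modelled as a table of transitions from each state keyed by detected intent. The state and intent names are hypothetical, and a real flow would also inspect the context (for example, entities already collected):

```python
# Hypothetical dialog flow: current state -> {detected intent: next state}
FLOW: dict[str, dict[str, str]] = {
    "start": {"greet": "welcome", "book_flight": "ask_destination"},
    "ask_destination": {"give_city": "ask_date"},
    "ask_date": {"give_date": "confirm"},
}


def next_state(current: str, intent: str, fallback: str = "fallback") -> str:
    """Follow the transition for the detected intent, or fall back if none exists."""
    return FLOW.get(current, {}).get(intent, fallback)


print(next_state("start", "book_flight"))      # ask_destination
print(next_state("ask_destination", "greet"))  # fallback
```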

10/ Custom code processing

If the answer requires custom code execution, a Docker container is allocated in our infrastructure to run this code. Each execution is limited to a 90-second time slot.
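The time limit can be illustrated with Python's standard `subprocess` module. This is only a stand-in for the Docker-based setup described above: a plain subprocess provides no isolation, so it must not be used to run untrusted code. `run_custom_code` is a hypothetical helper.

```python
import subprocess
import sys

TIME_SLOT_SECONDS = 90  # the per-execution limit stated above


def run_custom_code(source: str, timeout: float = TIME_SLOT_SECONDS) -> str:
    """Run Python source in a separate process; stop it if it exceeds `timeout` seconds."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout,  # subprocess.run kills the child when this expires
        )
    except subprocess.TimeoutExpired:
        return "error: time slot exceeded"
    return result.stdout


print(run_custom_code("print(1 + 1)"))  # 2
```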

11/ Answer generation

The answer generation prepares the response of the bot according to what you have defined in your dialog flow, the context and your custom code.
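As a sketch, assuming answers are stored as templates attached to dialog states (a hypothetical format), generation boils down to filling placeholders with values from the context and from the custom code's output:

```python
from string import Template

# Hypothetical answer templates, one per dialog state.
ANSWERS = {
    "confirm": Template("Booking a flight to $city on $date. Shall I confirm?"),
}


def generate_answer(state: str, context: dict[str, str], custom: dict[str, str]) -> str:
    """Fill the state's template; custom code output overrides context values."""
    values = {**context, **custom}
    # safe_substitute leaves unknown placeholders as-is instead of raising KeyError.
    return ANSWERS[state].safe_substitute(values)


print(generate_answer("confirm", {"city": "Paris"}, {"date": "May 3"}))
# Booking a flight to Paris on May 3. Shall I confirm?
```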

12/ Logging

The whole exchange is logged in the platform database so you can monitor and improve your bot later.
