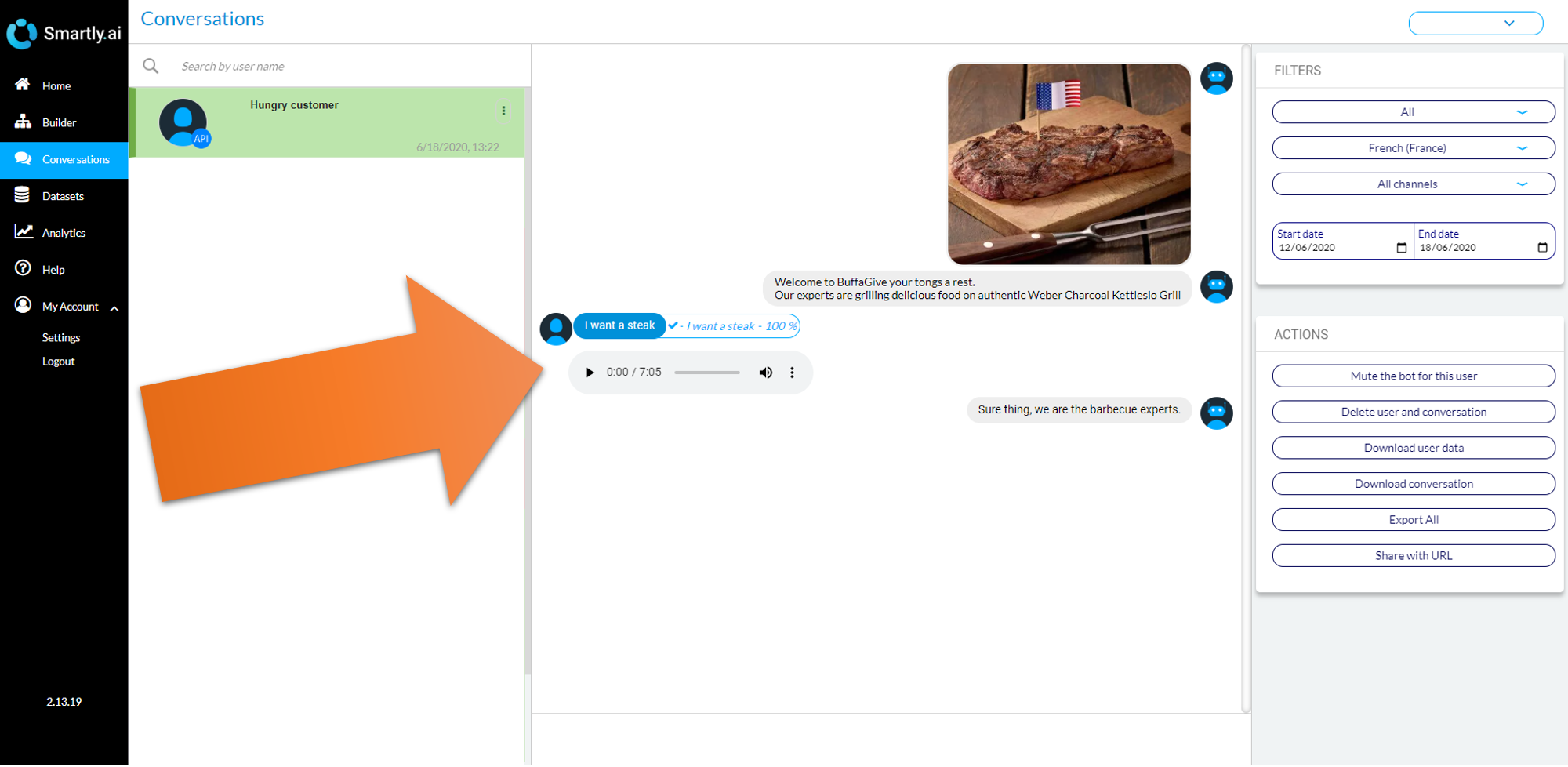

Audio files

Listening to audio inputs in the Conversation module

When you work on a voice assistant that combines several technologies (ASR, NLU, Dialog, TTS, ...), conversation issues can be hard to debug. Did the user have an accent? Was the user in a noisy environment? Is the transcription performing well? You can answer these questions by sending a reference to the audio recording as metadata attached to the user input.

To do so, send the input file URL in the user_data object of the /dialog API.

More specifically, add an object called user_data.input_file with the following syntax:

"user_data":{

"input_type":"voice",

"input_file": {

"type":"audio",

"fileUri":"YOUR AUDIO FILE URL HERE",

"text":"TEXT TRANSCRIPTION OF YOUR AUDIO FILE URL HERE",

"confidence":0.8219771,

"lang":"fr-fr"

},

}If you do so, you will see an audio player in the conversation module, allowing you to listen to the audio file, and compare it to the transcription.
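As an illustration of the syntax above, here is a minimal Python sketch that assembles the user_data object and prints it as JSON. The helper name `build_user_data`, the example.com URL, and the sample transcription are hypothetical; only the field names come from this page. How the fragment is merged into the rest of the /dialog request body is not covered here.

```python
import json


def build_user_data(file_uri, transcription, confidence, lang):
    """Build a user_data object that references an audio recording.

    Hypothetical helper: only the field names are taken from this page.
    """
    return {
        "input_type": "voice",
        "input_file": {
            "type": "audio",
            "fileUri": file_uri,
            "text": transcription,
            "confidence": confidence,
            "lang": lang,
        },
    }


# Placeholder values, for illustration only.
user_data = build_user_data(
    file_uri="https://example.com/recordings/utterance-001.wav",
    transcription="bonjour",
    confidence=0.8219771,
    lang="fr-fr",
)

# Fragment to merge into the body of the /dialog request.
print(json.dumps({"user_data": user_data}, indent=2, ensure_ascii=False))
```

Building the object in one place keeps the field names consistent across every request that carries an audio reference.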

Only on user inputs

This feature only works for new user inputs, so use it only with the NEW_INPUT events of the /dialog API.
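One way to respect this restriction on the client side is to attach input_file only when the event being sent is NEW_INPUT. The sketch below assumes your code knows the event name before it builds the request; the function name `attach_audio_if_new_input` and the `OTHER_EVENT` placeholder are hypothetical and are not part of the /dialog API.

```python
def attach_audio_if_new_input(event_name, user_data, input_file):
    """Return a copy of user_data, adding input_file only for NEW_INPUT events."""
    result = dict(user_data)  # shallow copy, so the caller's dict is left untouched
    if event_name == "NEW_INPUT":
        result["input_file"] = input_file
    return result


# Placeholder audio reference, for illustration only.
audio = {
    "type": "audio",
    "fileUri": "https://example.com/recordings/utterance-001.wav",
    "text": "bonjour",
    "confidence": 0.8219771,
    "lang": "fr-fr",
}

with_audio = attach_audio_if_new_input("NEW_INPUT", {"input_type": "voice"}, audio)
# "OTHER_EVENT" stands in for any event that is not NEW_INPUT.
without_audio = attach_audio_if_new_input("OTHER_EVENT", {}, audio)
```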

In this case, you will be able to listen to both the audio input and the audio output.
