May 2024

By Hicham TAHIRI

Version 3.11.2

Enhanced LLM-based NLU

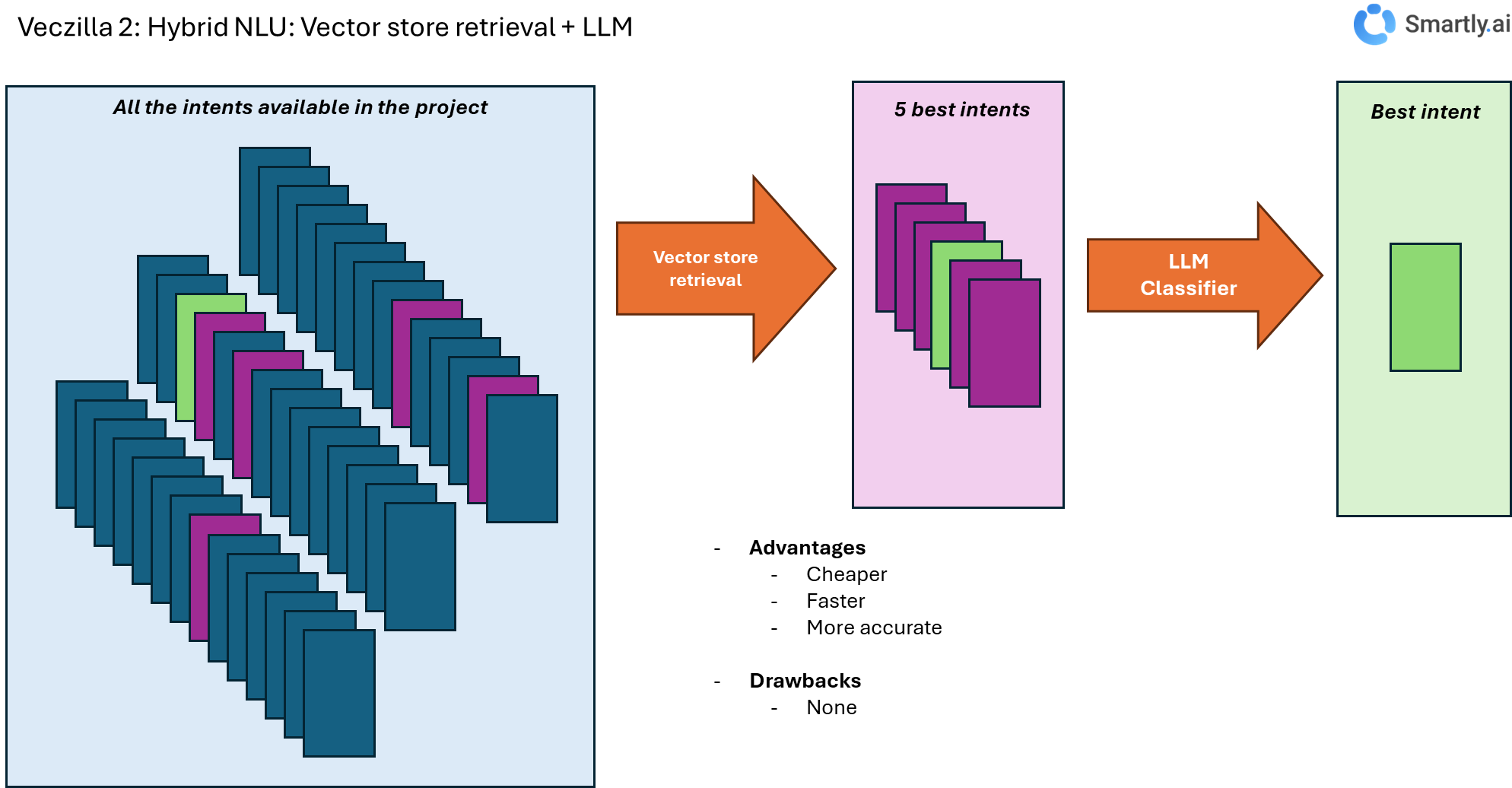

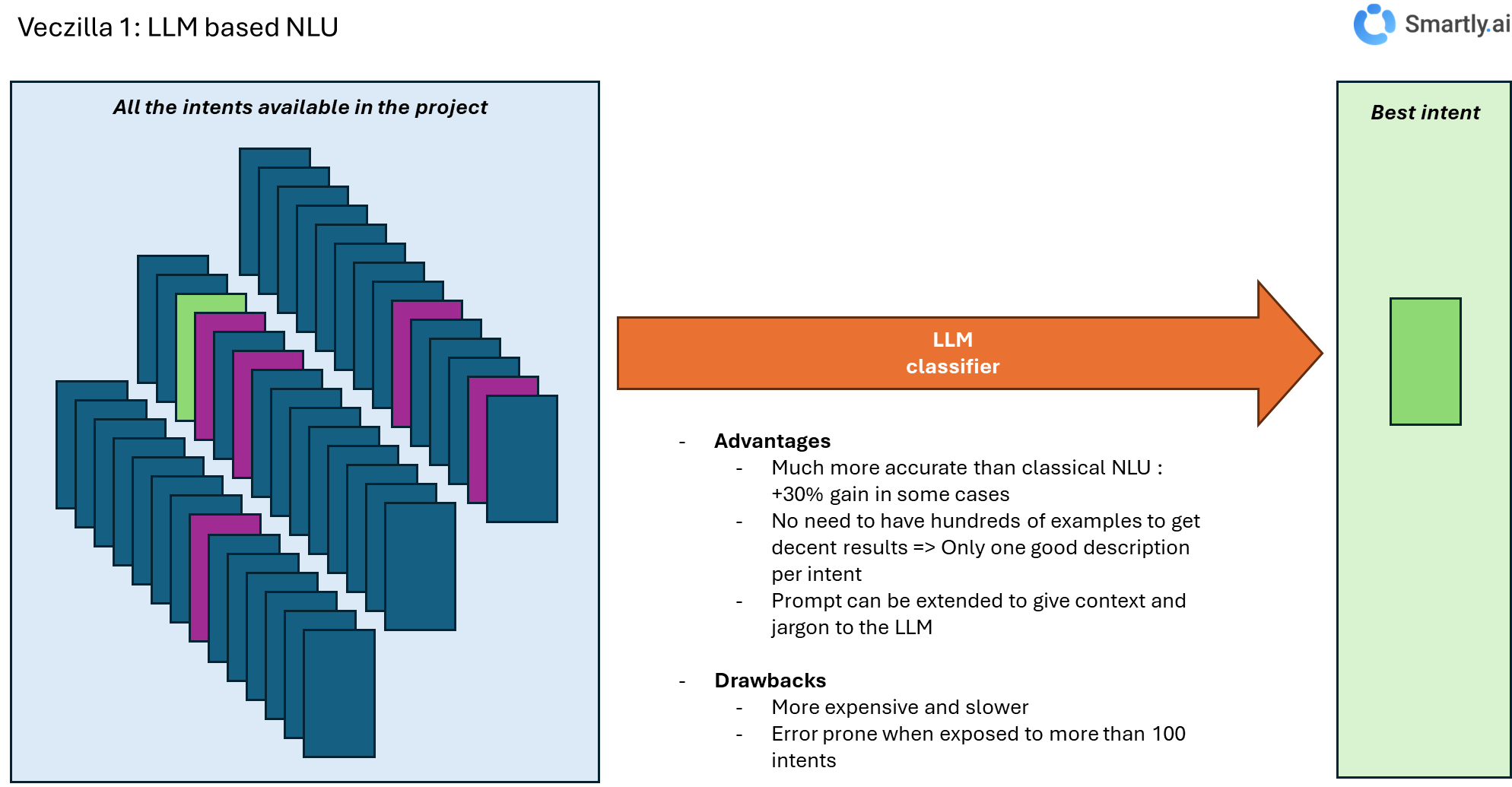

We have improved the LLM based NLU by adding an intent retrieval stage that selects the N best candidates before sendin send to the LLM. This reduces dramatically the inference cost and latency. The figure below show you how it works.

Our previous implementation of the LLM based Classifier (Veczilla 1)

Our new implementation (Veczilla 2)
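The release note does not say how the retrieval stage scores intents. The Python below is a minimal sketch that assumes similarity-based retrieval. It ranks a hypothetical intent catalogue against the user message using a toy bag-of-words cosine similarity, then places only the top N intents into the classification prompt. `INTENTS`, `embed`, `retrieve_candidates` and `build_prompt` are illustrative names and are not part of the product. A real system would more likely use a sentence-embedding model, and the LLM call itself is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical intent catalogue: intent name -> example phrase.
INTENTS = {
    "book_flight": "book a flight ticket to a destination",
    "cancel_flight": "cancel my flight reservation",
    "check_weather": "what is the weather forecast today",
    "order_pizza": "order a pizza for delivery",
    "reset_password": "reset my account password",
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector (assumption: stands in for a real sentence encoder)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve_candidates(utterance: str, intents: dict[str, str], n: int) -> list[str]:
    """Retrieval stage: keep only the n intents most similar to the utterance."""
    query = embed(utterance)
    ranked = sorted(
        intents, key=lambda name: cosine(query, embed(intents[name])), reverse=True
    )
    return ranked[:n]


def build_prompt(utterance: str, candidates: list[str]) -> str:
    """Classification prompt that lists only the retrieved candidates."""
    options = "\n".join(f"- {name}" for name in candidates)
    return (
        "Classify the user message into one of these intents:\n"
        f"{options}\nMessage: {utterance}\nIntent:"
    )


if __name__ == "__main__":
    message = "I need to cancel my flight"
    top = retrieve_candidates(message, INTENTS, n=2)
    print(top)
    print(build_prompt(message, top))
```

With this approach, the prompt length grows with N rather than with the total number of intents. That is why narrowing the candidates before the LLM call lowers cost and latency.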