March 2024

Version 3.9.6

🗓 Release Schedule

- Smartly.AI Platform Update: Shipped

- Virtual Agent Update: Shipped

Security Update & API Updates

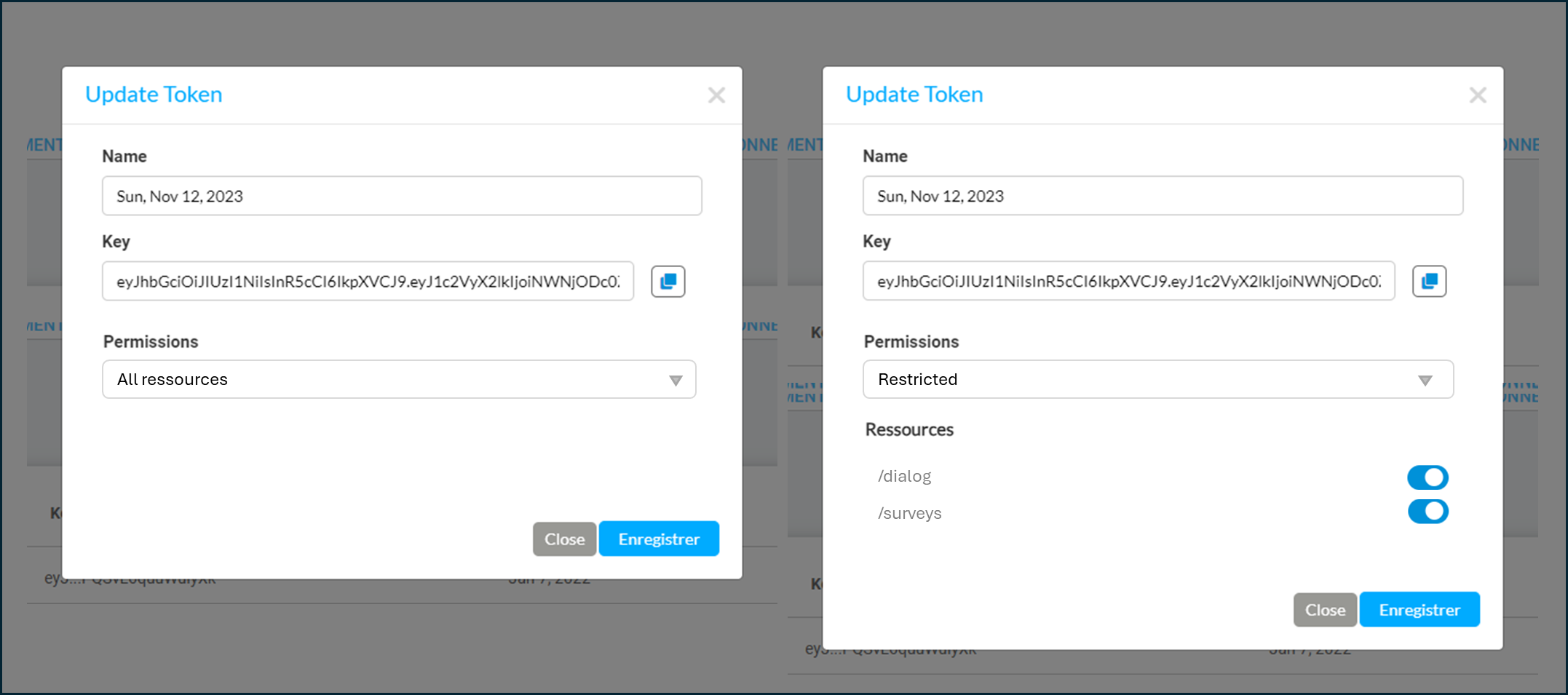

Scope-limited API tokens

We've introduced the ability to restrict the scope of an API token. Until now, every API token granted access to all resources. With the new restricted mode, you can specify which resources your API token can access, giving you more security and control.

Scope-limited API tokens

Best Practices for API Token Security and Permission Management

To enhance security, we strongly recommend keeping your API token secret: it must never be exposed in front-end or mobile applications. Server-to-server (back-end to back-end) communication is the best way to safeguard your data. If your usage mainly involves the /dialog and /surveys routes, we advise using the restricted permission mode, as it significantly reduces the risk of unauthorized access by limiting exposure to the resources you actually need. If your needs extend beyond these routes, you may need to keep an unrestricted scope. This approach not only secures your system but also builds trust with your users by demonstrating a commitment to data protection.
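To make the trade-off concrete, here is a small Python model of token scopes. The `ApiToken` class and `recommended_token()` function are hypothetical illustrations, not part of the Smartly.AI API; the only facts taken from these notes are that an unrestricted token reaches all resources and that restricted mode suits integrations limited to /dialog and /surveys. Whatever scope you choose, the token itself should live only on your server (for example, in an environment variable), never in front-end or mobile code.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable

# Routes that the best-practice advice singles out as suited to restricted mode.
RESTRICTED_FRIENDLY_ROUTES: FrozenSet[str] = frozenset({"/dialog", "/surveys"})


@dataclass(frozen=True)
class ApiToken:
    """Hypothetical model of a token's scope, not the platform's actual data type."""
    restricted: bool
    allowed_routes: FrozenSet[str] = frozenset()

    def can_access(self, route: str) -> bool:
        # An unrestricted token reaches every resource; a restricted one only its list.
        return not self.restricted or route in self.allowed_routes


def recommended_token(routes_used: Iterable[str]) -> ApiToken:
    """Return the narrowest scope that still covers every route your back end calls."""
    routes = frozenset(routes_used)
    if routes <= RESTRICTED_FRIENDLY_ROUTES:
        return ApiToken(restricted=True, allowed_routes=routes)
    # Needs beyond /dialog and /surveys may require an unrestricted token.
    return ApiToken(restricted=False)
```

A back end that only calls /dialog would get a restricted token that is refused on every other route, which is exactly the reduced exposure the recommendation aims for.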

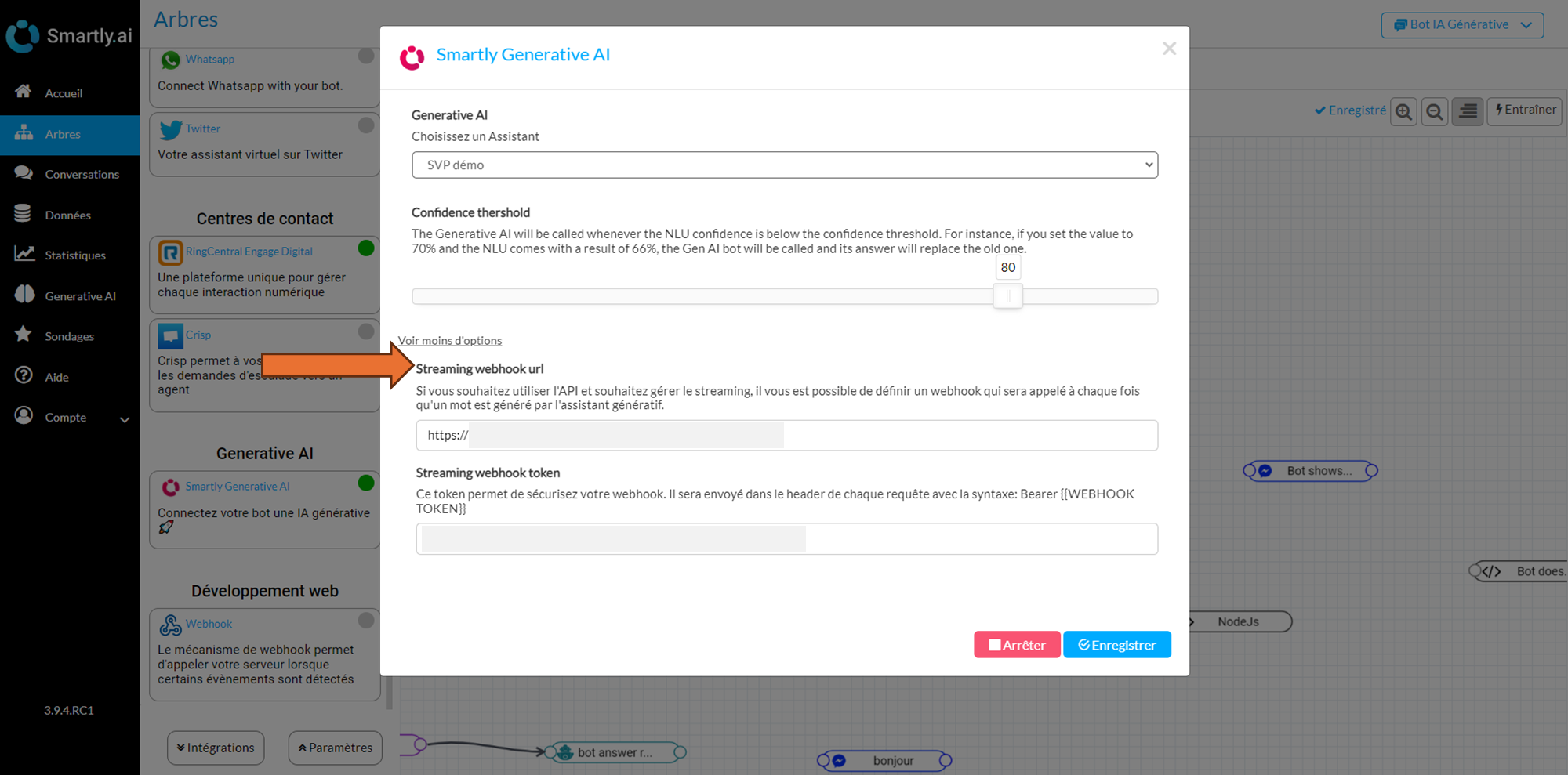

Live Response Streaming

We're excited to announce streaming support for generative assistants, enabling real-time responses via our API. Set the streaming field to true in the /dialog API, specify a webhook to be called back at each new token, and receive the response as it is being generated. Detailed setup instructions are available in our Smartly.AI Dialog API documentation.

To configure your Generative AI webhook, go to the Builder > Integrations > Webhook panel and enter the URL to be called after each generated token.

Integrate streaming capability into your apps thanks to our webhook service
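A minimal sketch of a service that could receive these callbacks, using only the Python standard library. It assumes each callback arrives as a JSON POST body with the fields shown in the payload examples in this section (user_id, sentence, status), and that sentence carries the full text generated so far, so each update replaces the previous one. The `StreamState` class, the `run()` function and the port are hypothetical, not part of the Smartly.AI API.

```python
import json
from dataclasses import dataclass, field
from http.server import BaseHTTPRequestHandler, HTTPServer

# Final status value, as it appears in the webhook payload examples.
COMPLETED = "completed"


@dataclass
class StreamState:
    """Latest text per user (assumes each update carries the full text so far)."""
    current: dict = field(default_factory=dict)   # user_id -> text to display now
    finished: dict = field(default_factory=dict)  # user_id -> final answer

    def apply(self, update: dict) -> bool:
        """Record one webhook update; return True once the answer is complete."""
        user_id = update["user_id"]
        # Replace, rather than append to, the message shown to this user.
        self.current[user_id] = update["sentence"]
        if update["status"] == COMPLETED:
            self.finished[user_id] = update["sentence"]
            return True
        return False


STATE = StreamState()


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Assumption: the callback body is JSON.
        length = int(self.headers.get("Content-Length", 0))
        update = json.loads(self.rfile.read(length))
        done = STATE.apply(update)
        print(f"[{update['status']}] user {update['user_id']}: {update['sentence']}")
        if done:
            print(f"Answer for user {update['user_id']} is complete.")
        self.send_response(200)
        self.end_headers()


def run(port: int = 8080) -> None:
    """Serve callbacks; this server's public URL goes in the Webhook panel."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Calling `run()` blocks and serves on the chosen port; the state handling is kept in `StreamState` so it can be exercised without a network.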

The webhook sends an update at each stage of answer generation:

Before answer generation:

{

user_id: 1234, // The user that should receive this updated message

sentence: "One moment please...",

rich_message: null,

time: 2024-03-22T06:35:49.038Z,

status: "waiting for instructions"

}During answer generation

{

user_id: 1234, // The user that should receive this updated message

sentence: "Hello, I'm here",

rich_message: null,

time: 2024-03-22T06:35:49.038Z,

status: "in progress"

}Once answer generation is completed

{

user_id: 1234, // The user that should receive this updated message

sentence: "Hello! I'm here to help. What do you need assistance with today?",

rich_message: null,

time: 2024-03-22T06:35:49.038Z,

status: "completed"

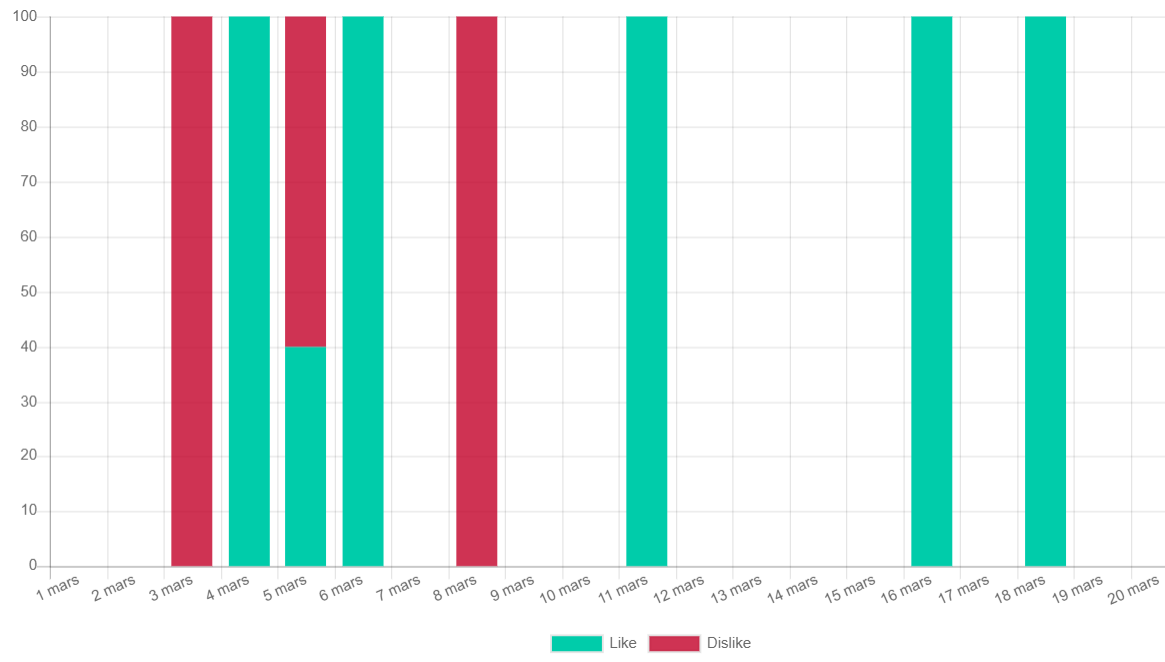

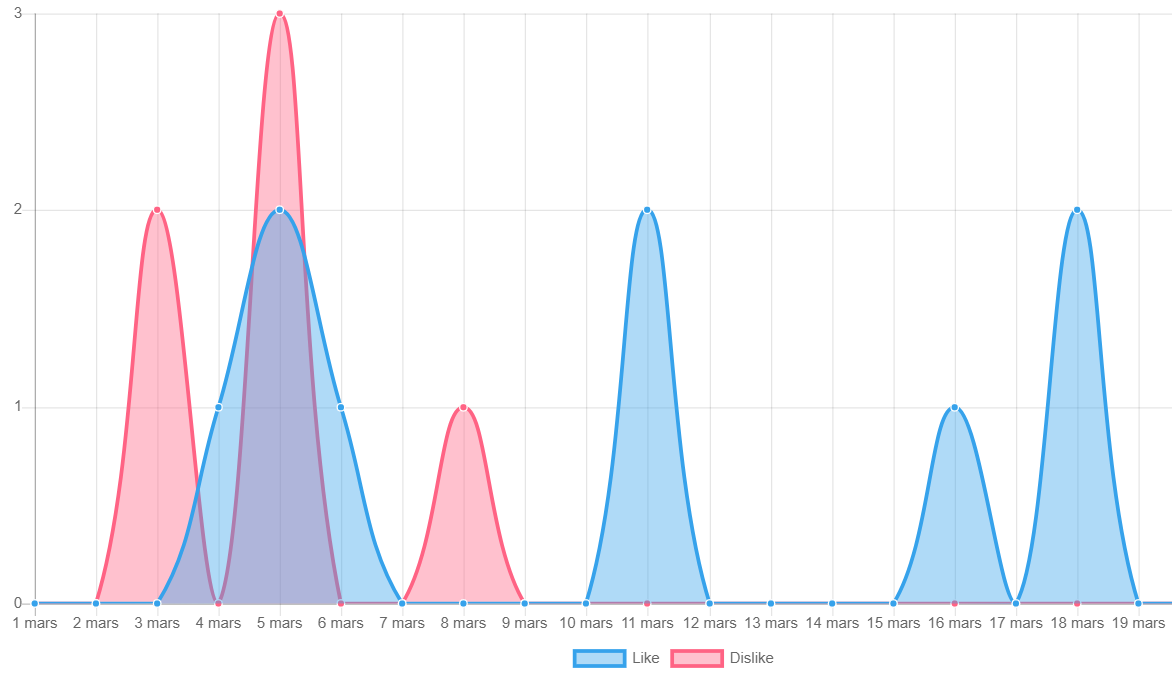

}Analytics: Response Vote Satisfaction and Date Format

- New User Satisfaction Charts: Track the evolution of customer satisfaction through response surveys, with visuals for both positive and negative feedback trends.

Positive / Negative vote evolution (%)

Positive / Negative feedback evolution (total)

- Date Format Update: We've changed the date format from "MM/DD" to "D MMM" (e.g., "4 Feb") to ensure clarity across all regions.

- Conversations Module Enhancement: Hybrid Natural Language Understanding (NLU) is now integrated, improving conversational analysis through detected intents.

-

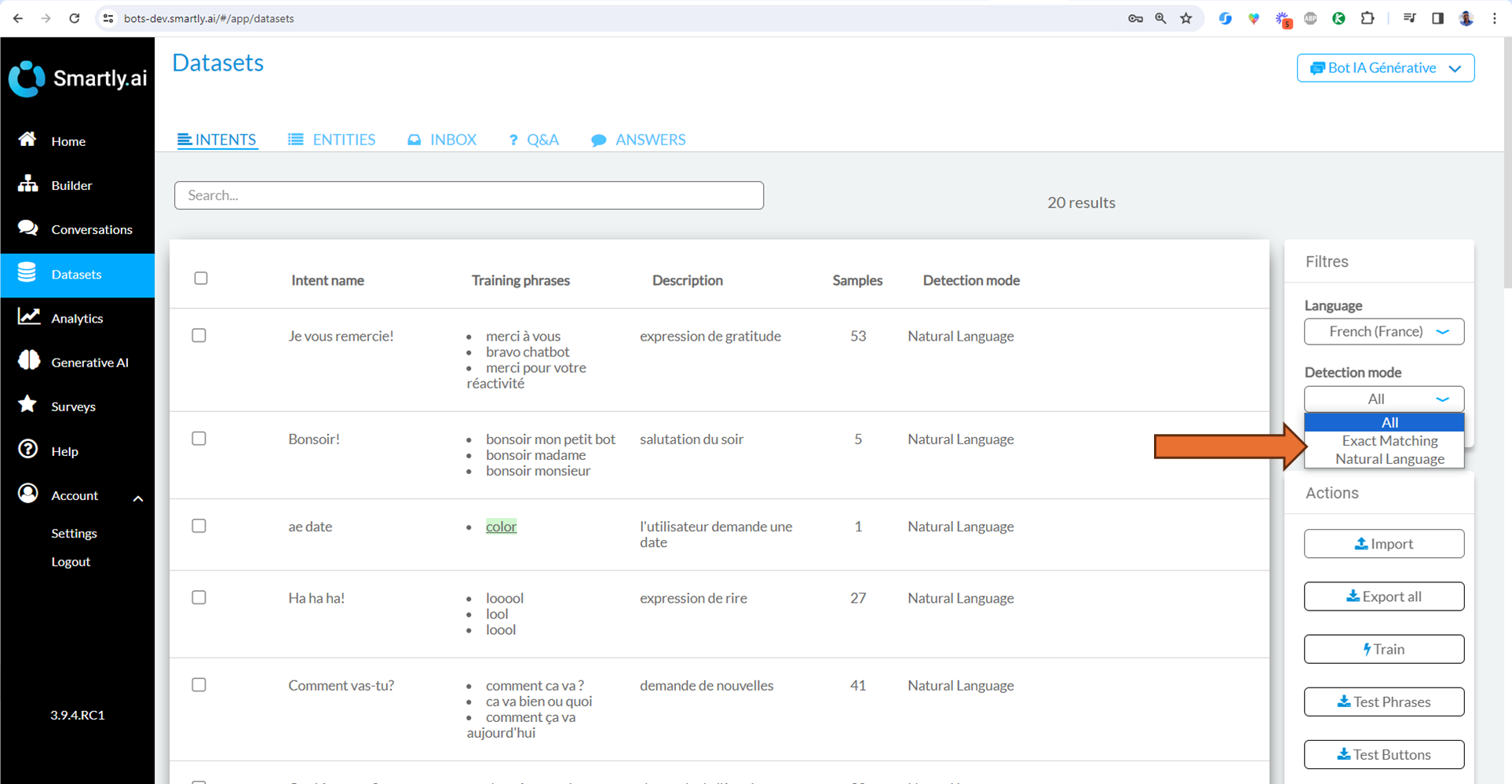

Dataset Module Enhancement: Now supports filtering intents by type, allowing for more precise intent management. This update enhances the ability to distinguish between exact matches and natural language processing (NLP) matches.

Intent type filter

- Hybrid NLU: Improved the generation of intent descriptions to increase intent detection accuracy, with descriptions that better match the language of the training data.
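The satisfaction charts and the new "D MMM" date format can be illustrated together. The sketch below is one plausible way to turn raw response votes into the per-day totals and percentages the two charts display, labelled in the new format; the `(day, is_positive)` vote shape and both function names are hypothetical, not the platform's implementation.

```python
from collections import Counter, defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def day_label(d: date) -> str:
    """Format a date as 'D MMM' (e.g. '4 Feb') instead of the old 'MM/DD'."""
    # %b is the locale's abbreviated month name ('Feb' in the default C locale);
    # d.day avoids the zero padding that %d would add.
    return f"{d.day} {d.strftime('%b')}"


def vote_evolution(votes: Iterable[Tuple[date, bool]]) -> List[Dict[str, object]]:
    """Aggregate (day, is_positive) votes into per-day totals and percentages."""
    per_day: Dict[date, Counter] = defaultdict(Counter)
    for day, positive in votes:
        per_day[day]["positive" if positive else "negative"] += 1

    rows = []
    for day in sorted(per_day):
        counts = per_day[day]
        total = counts["positive"] + counts["negative"]  # never 0: day has >= 1 vote
        rows.append({
            "day": day_label(day),
            "positive_total": counts["positive"],
            "negative_total": counts["negative"],
            "positive_pct": round(100 * counts["positive"] / total, 1),
            "negative_pct": round(100 * counts["negative"] / total, 1),
        })
    return rows
```

For example, two positive votes and one negative vote on 4 February produce a row labelled "4 Feb" with 66.7% positive and 33.3% negative, where the old format would have shown "02/04".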

Generative AI:

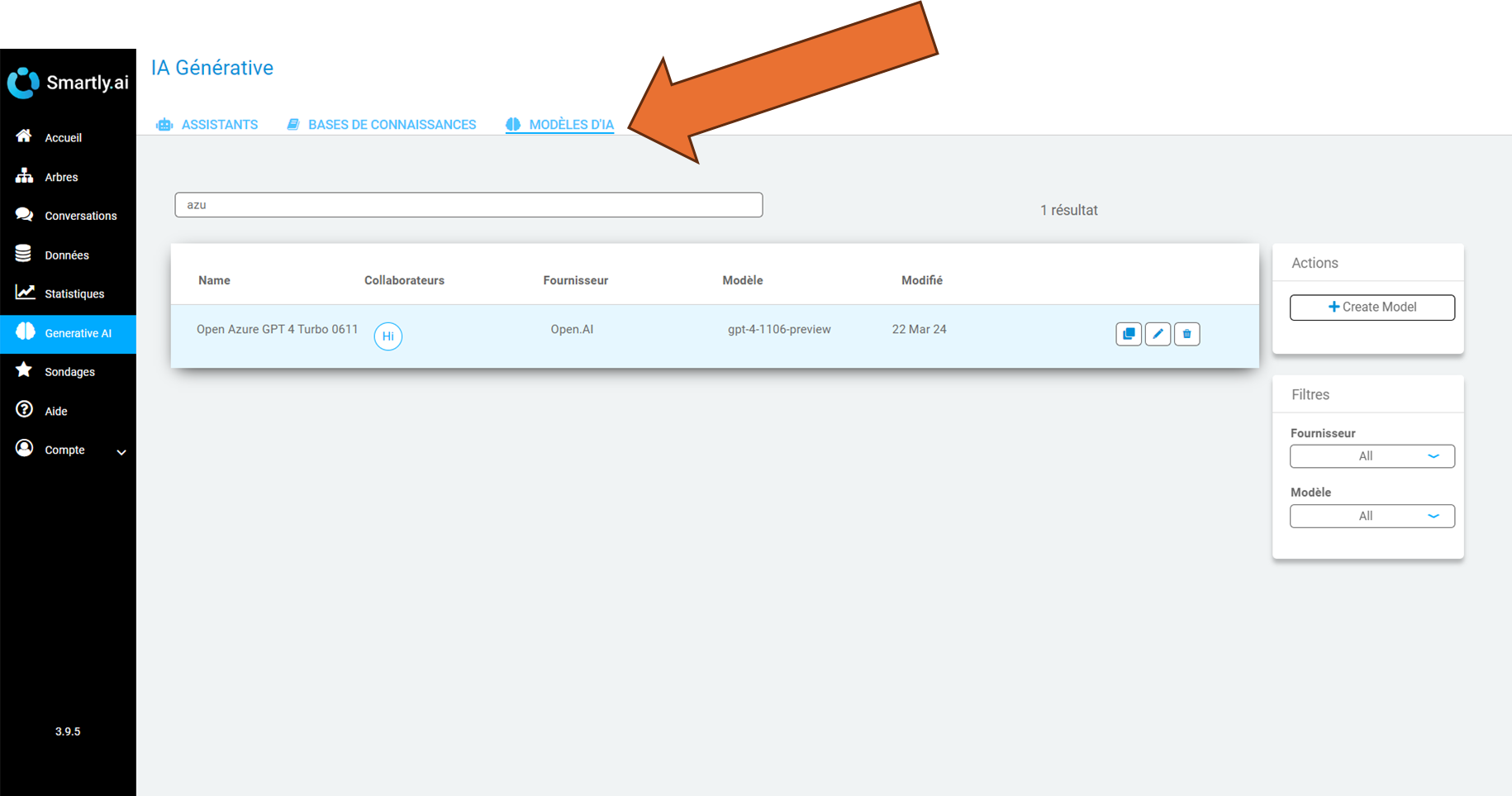

- Centralised LLM Integrations: A unified interface for managing Large Language Model (LLM) integrations, simplifying the process by eliminating the need to replicate API tokens and LLM information across different assistants and systems.

Centralised LLM Integrations

- Assistant Type Definition: Upcoming feature to allow users to specify the type of assistant they require, with options including:

  - RAG (Retrieval-Augmented Generation, default): Combines custom instructions with a knowledge base.

  - Prompt Only: Based solely on custom instructions.

  - Additional Types: More options will be announced in the future.

- Mistral AI Update: Added support for Mistral AI's latest model, mistral-large-latest.
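The sketch below models how a centralised LLM integration and the upcoming assistant types could fit together. It is a hypothetical configuration model, not the platform's schema: `LLMIntegration`, `Assistant`, `INTEGRATIONS`, `resolve_integration()` and `build_prompt()` are illustrative names. Each assistant references a shared integration by id instead of copying the provider token, and only RAG assistants add knowledge-base passages to the prompt.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class AssistantType(Enum):
    RAG = "rag"                  # custom instructions + knowledge base (default)
    PROMPT_ONLY = "prompt_only"  # custom instructions only


@dataclass(frozen=True)
class LLMIntegration:
    provider: str
    model: str
    api_token: str  # stored once here instead of in every assistant


@dataclass
class Assistant:
    name: str
    integration_id: str  # key into the shared INTEGRATIONS registry
    instructions: str
    assistant_type: AssistantType = AssistantType.RAG


# Hypothetical central registry; the token value is a placeholder.
INTEGRATIONS: Dict[str, LLMIntegration] = {
    "mistral": LLMIntegration("Mistral AI", "mistral-large-latest", "<your-token>"),
}


def resolve_integration(assistant: Assistant) -> LLMIntegration:
    """Look up the shared integration instead of storing credentials per assistant."""
    return INTEGRATIONS[assistant.integration_id]


def build_prompt(assistant: Assistant, question: str, passages: List[str]) -> str:
    """Assemble a prompt; only RAG assistants include knowledge-base passages."""
    parts = [assistant.instructions]
    if assistant.assistant_type is AssistantType.RAG:
        parts.append("Context:\n" + "\n".join(passages))
    parts.append("Question: " + question)
    return "\n\n".join(parts)
```

With this layout, switching every Mistral-based assistant to a different model or token means editing a single registry entry.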

Infrastructure

We are excited to announce that we have successfully migrated our platform to Microsoft Azure, with all resources now located in France, ensuring full compliance with security and GDPR standards. This transition includes our primary platform, accessible at https://bots.smartly.ai. However, please note that our Virtual Agent platform, found at https://bots-virtual-agent-enriched.smartly.ai/, is scheduled for migration shortly. Dedicated communication regarding this maintenance operation will be provided to Virtual Agent users in due course.

This strategic move from our previous cloud provider, Flexible Engine, to Microsoft Azure was driven by two main considerations: first, the impending discontinuation of Flexible Engine required us to find an alternative; second, the wealth of Large Language Model (LLM) and Generative AI resources available on Azure aligns with our growing focus on these technologies. We believe this transition is a positive step forward for our services and our users.